What is a supercomputer, and what can it do?

1. Introduction

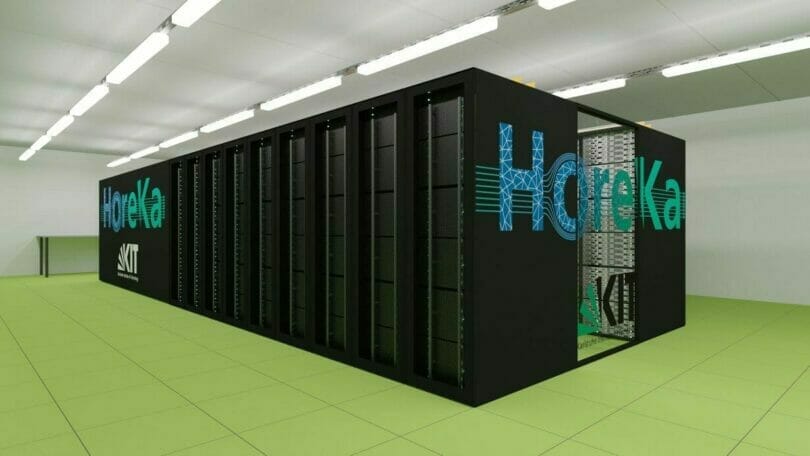

Supercomputers are very powerful computers used mainly for scientific and engineering problems that involve exceptionally large datasets or enormous numbers of calculations. Early machines were bought largely by government laboratories for nuclear research and other physics simulations.

Beyond physics, supercomputers are used in many fields, including medicine and weather forecasting. A supercomputer performs tasks that would be impractical without its enormous computing power. It does not use arithmetic that other computers cannot perform; rather, it performs the same kinds of calculations at a vastly larger scale and speed.

Advances in technology have improved computing speed, accuracy, memory capacity, and energy efficiency, making computers more useful than ever. Supercomputers, however, are much harder to build than ordinary computers: tens of thousands of processors must be connected by a fast network, kept supplied with data, powered, and cooled, and the software must divide each problem so that all of them can work on it at once. Once a job is set up, the machine can run it for hours or days without human intervention.

There is no fixed threshold that makes a computer a “supercomputer”; the term is relative and describes the fastest machines of their time. Performance is usually stated in floating-point operations per second (FLOPS), and the TOP500 list ranks systems by their results on the LINPACK benchmark. Supercomputers are also distinct from other large machines: mainframe computers are optimized for reliable, high-volume transaction processing, as in banking, and blade servers are compact server boards widely used in commercial data centers. Smaller systems include workstations, which are high-end single-user computers, and small servers built around chips that each contain many processor cores.
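The peak figure quoted for a system is simple arithmetic: nodes × cores per node × clock rate × floating-point operations each core can complete per cycle. The sketch below uses made-up numbers for a hypothetical cluster; `peak_flops` and `efficiency` are illustrative helpers, not part of any benchmark suite. It also computes the ratio of a measured benchmark result (Rmax) to the theoretical peak (Rpeak), the two figures the TOP500 list publishes for each system.

```python
# All figures below are hypothetical; they do not describe any real machine.


def peak_flops(nodes: int, cores_per_node: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak (Rpeak): every core completes its maximum FLOPs on every cycle."""
    return nodes * cores_per_node * clock_hz * flops_per_cycle


def efficiency(measured_flops: float, peak: float) -> float:
    """Fraction of theoretical peak achieved on a benchmark run (Rmax / Rpeak)."""
    return measured_flops / peak


# Assumed cluster: 1,000 nodes, 64 cores per node, 2.0 GHz,
# 16 double-precision FLOPs per cycle per core.
rpeak = peak_flops(1_000, 64, 2.0e9, 16)
rmax = 1.5e15  # assumed benchmark result, not a real measurement

print(f"Rpeak = {rpeak:.3e} FLOPS")  # Rpeak = 2.048e+15 FLOPS
print(f"Efficiency = {efficiency(rmax, rpeak):.1%}")  # Efficiency = 73.2%
```

Real workloads never reach Rpeak, which is why rankings are based on the measured Rmax rather than the theoretical peak.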

Supercomputers also demand enormous amounts of electricity. The largest systems draw tens of megawatts, and nearly all of that energy ends up as heat, so they need extensive cooling, often using liquid cooling rather than air alone. Energy efficiency, measured in floating-point operations per second per watt, has therefore become a design goal in its own right, and the Green500 list ranks systems by it.
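Power and efficiency figures are related by straightforward unit arithmetic. This minimal sketch assumes a hypothetical machine sustaining 1 exaFLOPS while drawing 20 MW; the helper names are invented for illustration, and the efficiency is expressed in GFLOPS per watt, the unit the Green500 list uses.

```python
def gflops_per_watt(sustained_flops: float, power_watts: float) -> float:
    """Energy efficiency in GFLOPS per watt."""
    return (sustained_flops / 1e9) / power_watts


def energy_mwh(power_watts: float, hours: float) -> float:
    """Electricity used at a constant power draw over some hours, in megawatt-hours."""
    return power_watts * hours / 1e6


# Assumed machine: sustains 1.0e18 FLOPS (1 exaFLOPS) while drawing 20 MW.
print(gflops_per_watt(1.0e18, 20e6))  # 50.0
print(energy_mwh(20e6, 24))  # 480.0 (one day of operation)
```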

2. History of Supercomputers

The first machines called supercomputers appeared in the 1960s, and each generation has since been overtaken by faster ones. A modern supercomputer usually occupies a dedicated facility, often called a supercomputing center. It is built from many nodes, each essentially a server with its own processors and memory, and systems are compared by the combined performance of all their nodes rather than by any single processor.

Supercomputers today perform calculations that would be impossible to complete by hand. Because the field moves quickly, individual machines are retired within several years: IBM Sequoia at Lawrence Livermore National Laboratory, for example, topped the TOP500 list in June 2012 and was decommissioned in 2020.

3. Types of Supercomputers

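The word “supercomputer” covers several architectures. Early machines such as the Cray-1 were vector processors, which apply one instruction to whole arrays of numbers at once. Later came massively parallel processors and clusters, which link many processors so that they work on parts of a problem simultaneously; together they are far faster than any individual component. Many of today’s fastest systems are clusters whose nodes also carry GPU accelerators.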

Clock speed alone does not make a computer “super.” A 3.0 GHz desktop processor is not necessarily faster than a 2.8 GHz one, because real performance also depends on how many cores the chip has, how many floating-point operations each core completes per cycle, and how quickly data can be moved from memory. A supercomputer’s advantage comes from combining thousands of processors, which only pays off if the work can be divided among them.
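Splitting work across many processors only helps to the extent that the work can actually be split. Amdahl’s law, a standard result brought in here to illustrate the point (the text above does not name it), gives the best-case speedup when a fraction p of a program parallelizes perfectly across n processors: speedup = 1 / ((1 − p) + p / n). The sketch assumes p = 0.95; the function name is hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Amdahl's law: best-case speedup on `processors` cores when only
    `parallel_fraction` of the single-processor run time can be spread across them."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# Assume 95% of the work parallelizes perfectly; the remaining 5% stays serial.
for n in (1, 10, 100, 10_000):
    print(f"{n:>6} processors -> {amdahl_speedup(0.95, n):5.2f}x")
```

With 5% serial work the speedup can never exceed 1 / 0.05 = 20, however many processors are added, which is why supercomputer software is engineered to keep serial sections as small as possible.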

4. Modern supercomputers

Modern supercomputers run scientific simulations and data analytics, as well as artificial intelligence tasks such as image processing and analysis, speech synthesis, and language translation. They can also process large amounts of data in real time or near real time. As a result, jobs that would keep a single desktop computer busy for years, or even centuries, can finish in hours or days.
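A rough time-to-solution estimate is total operations divided by sustained rate. The figures here are assumptions chosen for illustration: a job of 10^21 floating-point operations, a desktop sustaining 100 gigaFLOPS, and a supercomputer sustaining 100 petaFLOPS. Real jobs rarely sustain a machine’s full rate, so treat the results as orders of magnitude only.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def time_to_solution(total_flops: float, sustained_flops_per_s: float) -> float:
    """Seconds needed to perform `total_flops` operations at a constant sustained rate."""
    return total_flops / sustained_flops_per_s


job = 1e21  # assumed size of the calculation, in floating-point operations
desktop_s = time_to_solution(job, 1e11)  # assumed desktop: 100 gigaFLOPS sustained
super_s = time_to_solution(job, 1e17)  # assumed supercomputer: 100 petaFLOPS sustained

print(f"desktop: {desktop_s / SECONDS_PER_YEAR:.0f} years")  # about 317 years
print(f"supercomputer: {super_s / 3600:.1f} hours")  # about 2.8 hours
```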

Early supercomputers were built mainly for research and development at government and university laboratories. Besides raw arithmetic speed, they needed input and output systems fast enough to move data in and out without leaving the processors idle.

The CDC 6600, designed by Seymour Cray at Control Data Corporation (CDC) and introduced in 1964, is widely considered the first supercomputer. It could perform up to about three million floating-point operations per second (3 megaFLOPS), roughly three times faster than the previous record holder, the IBM 7030 Stretch. It followed earlier scientific machines of the 1950s and early 1960s, such as the IBM 704 and IBM 7090, and gave scientists and engineers at government laboratories far more computing power than had been available before.

Today’s supercomputers differ greatly from these early models. Instead of one very fast processor, they use parallel processing across thousands of nodes, and many add graphics processing units (GPUs) for general-purpose computation. Each node typically has its own memory (a distributed-memory architecture), and the nodes exchange data over a high-speed interconnect, so all parts of the machine remain physically connected and housed together.

In practice, this means a cluster of server nodes linked by a fast network such as InfiniBand, rather than a few mainframes. Software, commonly written using the Message Passing Interface (MPI) standard, splits each problem into pieces, has every node work on its piece at the same time, and combines the results. Each node can itself perform trillions of operations per second.
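The divide-and-combine pattern can be sketched in a few lines. This is a single-process illustration, not real cluster code: `split_into_chunks` and `distributed_sum` are hypothetical names, the “ranks” run one after another, and the final sum stands in for the network reduction that an MPI program would perform across nodes.

```python
def split_into_chunks(data: list[int], n_ranks: int) -> list[list[int]]:
    """Give each hypothetical rank (node) one contiguous slice; sizes differ by at most 1."""
    if n_ranks < 1:
        raise ValueError("n_ranks must be at least 1")
    base, extra = divmod(len(data), n_ranks)
    chunks, start = [], 0
    for rank in range(n_ranks):
        end = start + base + (1 if rank < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def distributed_sum(data: list[int], n_ranks: int) -> int:
    """Scatter the data, compute a local partial sum per rank, then reduce.

    The ranks run sequentially here; on a real cluster each would run on its
    own node and the last step would be a network reduction (for example an
    MPI reduce), which this sketch only imitates.
    """
    chunks = split_into_chunks(data, n_ranks)
    partial_sums = [sum(chunk) for chunk in chunks]  # "local" work on each rank
    return sum(partial_sums)  # stand-in for the reduction across nodes


values = list(range(1, 1_000_001))
print(distributed_sum(values, n_ranks=8))  # 500000500000
```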

The term “supercomputer” describes a computer with immense processing power. It is an informal label rather than a formal category, applied to the most powerful high-performance computers used by scientists and engineers in fields where computing power is essential, such as physics, chemistry, engineering, and information science.

5. The Future of Supercomputers

Supercomputers are the fastest computers in existence, and they are very large and very expensive. Their speed does not mean they can finish any task instantly: depending on the problem and the level of detail required, a job may complete in seconds or run for weeks or months.

All supercomputers have one thing in common: they combine far more processing power than ordinary computers. As a result, they can process massive amounts of data quickly and move it at high speed between processors, memory, and storage.

Supercomputers are used for everything from searching for new planets to predicting weather patterns. They can also simulate how medical treatments act in the body and help insurance companies model risk.

Supercomputers play a significant role in many aspects of our lives, even if we don’t realize it. Aircraft makers, for example, use them to simulate airflow over wings and engines before building prototypes. The weather and ocean forecasts that sailors rely on are produced by running numerical models, fed with satellite observations, on supercomputers. Financial firms also use large computing clusters to model risk and test automated trading strategies.
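Weather forecasts and airflow simulations work by repeatedly updating values on a grid according to the equations of physics. The smallest example of that kind of computation is the one-dimensional heat equation, advanced here with a standard explicit finite-difference scheme. This is an illustrative sketch only: `diffuse` is a hypothetical helper, `alpha` is an assumed dimensionless step parameter, and production models use three-dimensional grids, far richer physics, and split the grid across thousands of nodes.

```python
def diffuse(temps: list[float], alpha: float, steps: int) -> list[float]:
    """Advance the 1D heat equation with an explicit finite-difference scheme.

    alpha = D * dt / dx**2 (diffusivity times time step over grid spacing squared);
    this scheme is stable only for alpha <= 0.5. The two end points are held fixed.
    """
    if not 0.0 < alpha <= 0.5:
        raise ValueError("alpha must be in (0, 0.5] for stability")
    current = list(temps)
    for _ in range(steps):
        nxt = current[:]  # copy, so the fixed end values carry over unchanged
        for i in range(1, len(current) - 1):
            # Each interior point moves toward the average of its neighbours.
            nxt[i] = current[i] + alpha * (current[i - 1] - 2.0 * current[i] + current[i + 1])
        current = nxt
    return current


# One step on a rod with a hot spot in the middle and cold ends.
print(diffuse([0.0, 0.0, 100.0, 0.0, 0.0], alpha=0.25, steps=1))  # [0.0, 25.0, 50.0, 25.0, 0.0]
```

Run for many steps with ends held at different temperatures, the profile settles into a straight line between them, the steady state of the heat equation.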

Some people think it’s impossible to make money from a computer science course, because computers do no more than accept instructions and return results as ordered. That is not true at all. Computer science can be a very profitable field, because there is a great deal more to it than writing instructions. For example:

- Engineering algorithms that make computers behave more intelligently
- Designing machine learning systems that learn from data, including our personal experiences
- Creating programs that help people manage their health
- Developing interfaces between physical devices (such as smartwatches or implantable medical devices) and artificial intelligence systems
- Design work, which is also lucrative: companies around the world need designs and marketing materials developed with advanced computing capabilities such as artificial intelligence, graphics, and sound effects

6. Supercomputing today

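Electronic computers were applied to demanding scientific calculations, such as fluid dynamics, from their earliest days, and the first machines usually called supercomputers appeared in the 1960s. The term is reserved for the fastest machines of their day, so the threshold keeps rising. The TOP500 project ranks the world’s fastest systems twice a year by their measured performance on the LINPACK benchmark. IBM’s Roadrunner at Los Alamos National Laboratory became the first system to sustain 1 quadrillion (1,000,000,000,000,000) floating-point operations per second, or 1 petaFLOPS, in 2008. In June 2022, Frontier at Oak Ridge National Laboratory became the first machine on the list to exceed one exaFLOPS (10^18 floating-point operations per second), and newer systems such as El Capitan at Lawrence Livermore National Laboratory have since overtaken it.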

FLOPS stands for floating-point operations per second: the number of arithmetic operations on real (floating-point) numbers a machine completes each second. Published figures may be either a theoretical peak or a measured benchmark result, so it matters which one is quoted.

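The usual prefixes follow the SI scale: one gigaFLOPS is one billion (10^9) floating-point operations per second, one teraFLOPS is one trillion (10^12), one petaFLOPS is one quadrillion (10^15), and one exaFLOPS is one quintillion (10^18).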
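Converting a raw rate into these prefixed units is a matter of dividing by the right power of ten. The helper below is a hypothetical convenience function, not a standard library call; it picks the largest SI prefix the value reaches.

```python
# SI prefixes from largest to smallest, with their scale factors.
PREFIXES = [
    ("exa", 1e18),
    ("peta", 1e15),
    ("tera", 1e12),
    ("giga", 1e9),
    ("mega", 1e6),
    ("kilo", 1e3),
]


def format_flops(rate: float) -> str:
    """Express a FLOPS value using the largest prefix it reaches."""
    for name, scale in PREFIXES:
        if rate >= scale:
            return f"{rate / scale:g} {name}FLOPS"
    return f"{rate:g} FLOPS"


print(format_flops(2.048e15))  # 2.048 petaFLOPS
print(format_flops(3e6))  # 3 megaFLOPS
```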

No computer comes anywhere near 10^34 operations in a nanosecond. For scale, an exaFLOPS machine performs 10^18 operations per second; since a nanosecond is one-billionth of a second, that works out to about 10^9 (one billion) operations in each nanosecond.
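The TOP500 list ranks machines using the High-Performance LINPACK (HPL) benchmark, which measures how fast a system solves a large, dense system of linear equations Ax = b. The toy version below solves such a system by Gaussian elimination with partial pivoting on a single core; HPL itself uses a blocked LU factorization distributed across every node. `solve_dense` and `approx_flops` are hypothetical names, and `approx_flops` gives only the leading term of the operation count, about (2/3)·n³.

```python
def solve_dense(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented matrix [A | b], copied so the inputs are not modified.
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for k in range(n):
        # Partial pivoting: bring the row with the largest |entry| in column k to row k.
        pivot = max(range(k, n), key=lambda i: abs(m[i][k]))
        if m[pivot][k] == 0.0:
            raise ValueError("matrix is singular")
        m[k], m[pivot] = m[pivot], m[k]
        # Eliminate column k below the pivot.
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= factor * m[k][j]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def approx_flops(n: int) -> float:
    """Leading-order operation count of the factorization, about (2/3) n^3."""
    return 2.0 * n**3 / 3.0


print(solve_dense([[2.0, 1.0], [1.0, 3.0]], [3.0, 5.0]))  # approximately [0.8, 1.4]
```

Dividing `approx_flops(n)` by the measured wall-clock time gives a rough FLOPS rate; HPL’s official count also includes lower-order terms.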

7. Conclusion

A supercomputer is a computer that is exceptionally powerful and fast. It is typically used for complex calculations and other demanding tasks. Supercomputer performance is usually measured in floating-point operations per second (FLOPS), that is, how many calculations the machine can complete, quickly and accurately, each second.

A supercomputer is any of a class of extremely powerful computers. The term is commonly applied to the fastest high-performance systems available. Such computers have been used primarily for scientific and engineering work requiring exceedingly high processing speeds and large memory capacities.

Supercomputers remain challenging and expensive to build and run compared with desktop computers, chiefly because of their scale, power consumption, and cooling needs.

The term “supercomputer” is used mainly to refer to high-performance computers that provide large amounts of memory and compute power.

High-performance computers are used for scientific computation as well as large-volume data processing. Routine tasks such as data storage and web farm management, however, are usually handled by ordinary servers in data centers rather than by supercomputers.
